Cullen International has updated a benchmark on national initiatives on age-verification systems to control or restrict the exposure of minors to harmful content (such as pornographic content and gratuitous violence) on internet platforms.

Where initiatives exist (beyond the mere transposition of EU-level rules), the benchmark shows:

- their aim and scope of application;

- whether a specific type of technology is mandated or foreseen (photo identification matching, credit card checks, facial estimation…);

- whether the system applies to service providers established in other member states;

- a description of the main aspects/rules.

Background

The Audiovisual Media Services (AVMS) Directive requires audiovisual media services and video-sharing platforms (VSPs) to take appropriate measures to protect minors from content that can impair their physical, mental or moral development. For VSPs, measures should consist of, as appropriate, setting up and operating age verification systems or (easy to use) systems to rate content. It is up to the relevant regulatory authorities to assess whether measures are appropriate considering the size of the video-sharing platform service and the nature of the service that is provided.

The Digital Services Act (DSA) requires platforms that are accessible to minors to take measures to ensure children's safety, security and privacy. It also requires very large online platforms (VLOPs) and very large online search engines (VLOSEs) to assess the impact of their services on children’s rights and safety, and take mitigating measures if needed, which may include age verification.

Findings

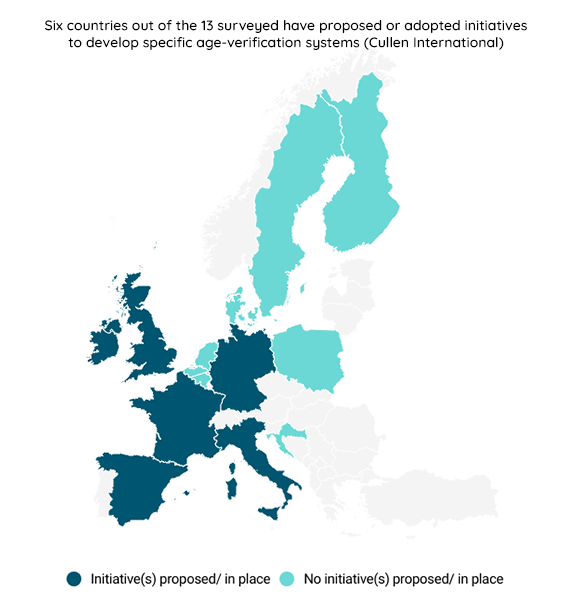

The benchmark shows that among the countries covered, France, Germany, Italy, Ireland, Spain and the UK have initiatives (in place or proposed).

Among other findings, the benchmark shows that France is tackling the question at both the technical and the regulatory level. At the technical level, the ministry in charge of the digital sector announced the launch of a test phase of a technical solution (based on the double anonymity principle) specifically aimed at blocking minors’ access to pornographic content. At the regulatory level, it is a criminal offence in France for any provider not to have a reliable technical process to prevent minors from accessing offending content. Legislation adapting French law to the DSA has also been adopted, allowing Arcom to set binding standards on the technical requirements for age-verification systems.

In Germany, legislation prohibits pornographic content (as well as content that is listed or clearly harmful to minors) from being distributed online unless providers ensure that only adults can access it by creating closed user groups. Providers use age-verification systems to control access to these closed user groups.

In Ireland, the rules apply to VSPs that host adult content and the focus is on ensuring that age verification is effective rather than on specifying a particular method.

